Is your business struggling to keep pace with the deluge of data generated by your Internet of Things (IoT) devices? If so, mastering RemoteIoT batch job processing on Amazon Web Services (AWS) is no longer optional: it's a business imperative.

In today's data-driven landscape, the volume of information streaming from IoT devices is exploding. Organizations across industries, from healthcare and manufacturing to agriculture and beyond, are leveraging IoT to gather invaluable insights, automate processes, and enhance decision-making. However, the sheer scale of this data presents a significant challenge: how do you efficiently and cost-effectively process it? AWS offers a comprehensive suite of services designed precisely for this purpose, making it easier than ever to harness the power of your IoT data. This article will delve deep into the world of RemoteIoT batch job processing on AWS, exploring best practices, practical strategies, and real-world examples to help you unlock the full potential of your IoT investments.

| Category | Details |
|---|---|
| Name | RemoteIoT Batch Job Processing |
| Core Technology | Integration of IoT devices with remote cloud-based systems, specifically leveraging AWS services |
| Primary Function | Enables efficient data collection, analysis, and processing from geographically dispersed IoT devices |
| Key Benefits | Improved data processing efficiency, reduced operational costs, enhanced scalability, and the ability to handle large datasets |
| AWS Services Utilized | AWS Batch, AWS Lambda, Amazon EC2, Amazon S3, AWS IoT Core, Amazon CloudWatch, AWS X-Ray |
| Typical Use Cases | Healthcare (patient monitoring), manufacturing (predictive maintenance), agriculture (environmental monitoring), smart cities (traffic management) |
| Core Processing Stages | Data transformation, batch processing, data storage |
| Security Considerations | Data encryption, IAM roles, regular security audits, least-privilege access |
| Reference | AWS Batch official documentation |

RemoteIoT, at its core, represents the convergence of the Internet of Things and cloud computing. It's about connecting IoT devices (sensors, actuators, and a wide array of other data-generating endpoints) to cloud-based systems for data collection, analysis, and processing. These devices can be located anywhere: in a remote agricultural field gathering soil data, on a factory floor monitoring machine performance, or in a patient's home tracking vital signs. The essence of RemoteIoT lies in extracting meaningful insights from data generated at the edge, far from centralized data centers, and putting those insights to use in real time or near real time.

The selection of AWS for RemoteIoT batch jobs stems from its strengths in scalability, reliability, and cost-effectiveness. AWS Batch, in particular, simplifies the management of batch computing workloads by automatically provisioning compute resources based on the requirements of each job. Consider an agricultural company that deploys numerous soil moisture sensors across a vast farmland. These sensors collect readings periodically, perhaps every hour, producing a steady flow of data that is crucial for optimizing irrigation and maximizing crop yield. Instead of manually managing the processing of this data, the company could route the sensor readings into Amazon S3 and use AWS Batch to run a custom-built data transformation script (written in Python, for instance) on EC2 instances. This script could clean the data, aggregate it, and identify anomalies. With AWS Batch, the company avoids provisioning and managing servers by hand, can scale the infrastructure as the number of sensors or the data volume grows, and pays only for the resources it actually uses.
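
To make the cleaning, aggregation, and anomaly-detection steps concrete, here is a minimal, standard-library-only sketch of what such a transformation script might contain. The record layout (`sensor_id`, `ts`, `moisture`), the 0 to 100 percent validity range, and the z-score threshold are all illustrative assumptions, not part of any AWS service.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical record shape: {"sensor_id": str, "ts": ISO-8601 string, "moisture": float or None}


def clean(records, lo=0.0, hi=100.0):
    """Drop readings that are missing or outside the plausible moisture range (percent)."""
    return [r for r in records
            if r.get("moisture") is not None and lo <= r["moisture"] <= hi]


def hourly_averages(records):
    """Average moisture per (sensor, hour); 'YYYY-MM-DDTHH' is the first 13 chars of the timestamp."""
    buckets = defaultdict(list)
    for r in records:
        buckets[(r["sensor_id"], r["ts"][:13])].append(r["moisture"])
    return {key: mean(values) for key, values in buckets.items()}


def flag_anomalies(averages, z=2.0):
    """Return (sensor, hour) keys whose average lies more than z standard deviations from the overall mean."""
    values = list(averages.values())
    if len(values) < 2:
        return []
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return sorted(key for key, v in averages.items() if abs(v - mu) > z * sigma)
```

Inside an AWS Batch job, the script would typically read its input from S3 and write results back; that I/O is left out so the logic can be run and tested locally. A z-score on hourly averages is only one simple anomaly heuristic; agronomic thresholds may suit a real deployment better.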

AWS Batch unlocks a variety of capabilities and efficiencies for RemoteIoT implementations. One of the most significant is automatic scaling: in a managed compute environment, AWS Batch adjusts the number of compute instances based on the jobs waiting in the queue, within the minimum and maximum vCPU limits you configure. If a surge of data arrives (for instance, because a weather event triggers increased sensor activity), AWS Batch scales up resources to handle the extra workload; as the load diminishes, it scales them back down, keeping costs in check. AWS Batch also integrates with other AWS services, such as S3 for data storage, Lambda for serverless function execution, and Amazon ECS, which runs the containerized jobs. This integration streamlines the data pipeline, making it easier to build and manage complex IoT solutions. Finally, AWS Batch is designed to be cost-effective: there is no additional charge for AWS Batch itself, and you pay only for the compute resources your jobs consume. Spot Instances can offer substantial savings compared to On-Demand Instances, at the cost of possible interruptions, which makes them a good fit for retryable batch work.
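
The scaling and Spot behavior described above is configured on a managed compute environment. The sketch below assembles keyword arguments for boto3's `batch.create_compute_environment`; the environment name, subnet, security group, and instance role values are hypothetical placeholders, and the default `maxvCpus` of 64 is an arbitrary example ceiling. Setting `minvCpus` to 0 lets the environment scale down to no running instances when the queue is empty.

```python
def spot_compute_environment(name, subnets, security_groups, instance_role, max_vcpus=64):
    """Build keyword arguments for boto3's batch.create_compute_environment (Spot, scale-to-zero)."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",       # AWS Batch provisions and scales the instances for you
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT",
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
            "minvCpus": 0,           # no instances (and no compute cost) while the queue is empty
            "maxvCpus": max_vcpus,   # upper bound on capacity during data surges
            "instanceTypes": ["optimal"],
            "subnets": subnets,
            "securityGroupIds": security_groups,
            "instanceRole": instance_role,  # ECS instance profile name or ARN (hypothetical here)
        },
    }
```

With AWS credentials configured, the request could be sent with `boto3.client("batch").create_compute_environment(**request)`. `SPOT_CAPACITY_OPTIMIZED` asks Batch to prefer Spot capacity pools that are less likely to be interrupted; check the AWS Batch documentation for the current allocation strategies and any additional roles your setup requires.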

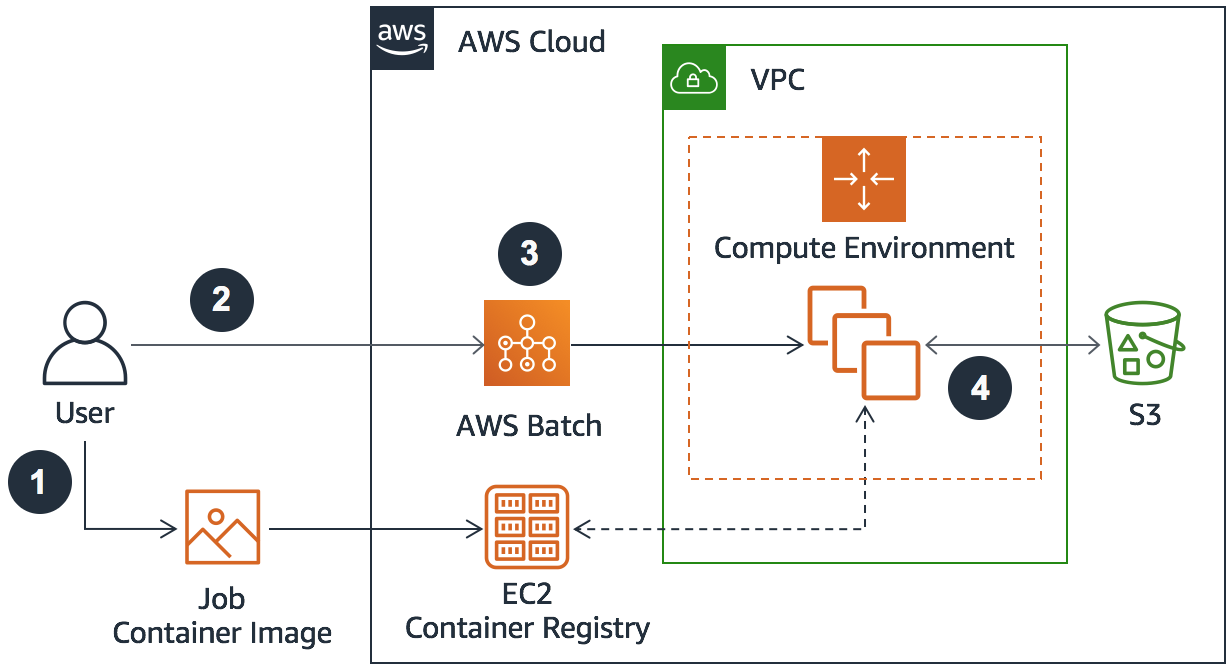

Getting started with RemoteIoT batch job processing on AWS requires a systematic approach, beginning with the foundational setup of your AWS environment. First, create an AWS account if you don't already have one, and sign in to the AWS Management Console. Next, define Identity and Access Management (IAM) roles with appropriate permissions; these roles control what actions your batch jobs, and the services running them, can perform within AWS. For example, a job role might grant permission to read sensor data from a specific S3 bucket, while the roles used by AWS Batch and its instances allow it to launch EC2 instances and write container logs to CloudWatch Logs. Then configure AWS Batch itself: create compute environments, which define the compute resources available (for example, EC2 or Spot Instances), and job queues, which hold submitted jobs and determine the order in which they are scheduled onto those compute environments. Each of these steps sets the stage for the smooth operation of your RemoteIoT batch jobs.
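
As an illustration of the IAM and job queue steps, the sketch below builds a least-privilege policy document for a job role that may only read raw sensor files under one S3 prefix and write results under another, plus keyword arguments for boto3's `batch.create_job_queue`. The bucket name, prefixes, and queue name are hypothetical. Log permissions are deliberately left out of the job role: with the `awslogs` log driver, writing container logs is normally handled by the instance role (or the execution role on Fargate).

```python
def job_role_policy(bucket, raw_prefix="raw/", out_prefix="processed/"):
    """Least-privilege job role policy: read raw sensor files, write processed output, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadRawSensorData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{raw_prefix}*"],
            },
            {
                "Sid": "WriteProcessedData",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{out_prefix}*"],
            },
        ],
    }


def job_queue_request(name, compute_environment, priority=1):
    """Keyword arguments for boto3's batch.create_job_queue, backed by a single compute environment."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,  # higher values are scheduled first when queues share an environment
        "computeEnvironmentOrder": [{"order": 1, "computeEnvironment": compute_environment}],
    }
```

The policy could be attached with `iam.put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=json.dumps(policy))`, and the queue created with `batch.create_job_queue(**queue)` once its compute environment exists.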

The processing of IoT data, once collected, often forms the crux of the value these systems deliver. Raw data from IoT devices typically arrives in unstructured or semi-structured formats and often needs significant preprocessing before analysis, which is where batch jobs excel. The general workflow starts with collecting data from the IoT devices; AWS IoT Core provides a secure and scalable way to connect devices to the cloud. The data is then typically routed to S3 buckets (for example, by an AWS IoT rule with an S3 action), which provide durable, readily accessible storage for further processing. Next, AWS Batch executes data transformation scripts. These scripts, written in languages such as Python or Java, perform tasks like cleaning data, converting formats, aggregating data, and enriching it with contextual information. Their output is often stored back in S3, ready for downstream analytics or visualization.
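
One common way to connect the storage and processing stages (an assumption here; the workflow above does not prescribe it) is an S3 event notification that invokes a Lambda function, which submits one AWS Batch job per new object. The sketch below converts the S3 event into `batch.submit_job` arguments and passes the object's location to the container through environment variables. `JOB_QUEUE`, `JOB_DEFINITION`, `INPUT_BUCKET`, and `INPUT_KEY` are hypothetical names, and the Batch client is injectable so the logic can be exercised without AWS.

```python
import re
import urllib.parse

# Hypothetical resource names; replace with your own queue and job definition.
JOB_QUEUE = "remoteiot-queue"
JOB_DEFINITION = "remoteiot-transform"


def submit_requests(event):
    """Convert an S3 event notification into batch.submit_job keyword arguments."""
    requests = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (for example, spaces become '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # Job names may contain only letters, digits, hyphens, and underscores (max 128 chars).
        safe = re.sub(r"[^A-Za-z0-9_-]", "-", key)[:100]
        requests.append({
            "jobName": f"transform-{safe}",
            "jobQueue": JOB_QUEUE,
            "jobDefinition": JOB_DEFINITION,
            "containerOverrides": {
                "environment": [
                    {"name": "INPUT_BUCKET", "value": bucket},
                    {"name": "INPUT_KEY", "value": key},
                ]
            },
        })
    return requests


def handler(event, context, batch_client=None):
    """Lambda entry point; submits one job per object and returns the job IDs."""
    if batch_client is None:
        import boto3  # included in the AWS Lambda Python runtime
        batch_client = boto3.client("batch")
    return [batch_client.submit_job(**req)["jobId"] for req in submit_requests(event)]
```

For high-frequency sensor uploads, one job per object can produce many tiny jobs; grouping several keys into one job, or using AWS Batch array jobs, is often more economical.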

Success with RemoteIoT batch jobs hinges on following best practices designed to optimize performance, minimize costs, and ensure security. Resource allocation is paramount. Use Spot Instances, which offer significant cost savings compared to On-Demand Instances, for tasks that can tolerate interruption; this can dramatically reduce operating expenses. Monitor job queues carefully to identify bottlenecks that slow down processing. Amazon CloudWatch can track metrics such as the number of queued jobs, job run durations, and resource utilization, although queue depth may need to be published as a custom metric. Use this monitoring to identify optimization opportunities. Finally, scale resources dynamically with the workload: set minimum and maximum vCPU limits on your managed compute environments so that AWS Batch can adjust capacity to the number of queued jobs, letting the system absorb fluctuating workloads without manual intervention.
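
Because queue depth may not be available as a built-in metric, one option is to publish it yourself. The sketch below counts jobs in the `RUNNABLE` state (submitted and waiting for compute capacity) using boto3's `list_jobs` paginator, then writes the count to CloudWatch with `put_metric_data`. The `RemoteIoT/Batch` namespace and `RunnableJobs` metric name are hypothetical, and running this on a schedule (for example, from a scheduled Lambda function) is an assumption.

```python
def count_runnable_jobs(batch_client, job_queue):
    """Count jobs in RUNNABLE state (waiting for compute capacity), across all result pages."""
    paginator = batch_client.get_paginator("list_jobs")
    pages = paginator.paginate(jobQueue=job_queue, jobStatus="RUNNABLE")
    return sum(len(page["jobSummaryList"]) for page in pages)


def publish_queue_depth(batch_client, cloudwatch_client, job_queue,
                        namespace="RemoteIoT/Batch"):  # hypothetical custom namespace
    """Publish the current RUNNABLE job count as a custom CloudWatch metric and return it."""
    depth = count_runnable_jobs(batch_client, job_queue)
    cloudwatch_client.put_metric_data(
        Namespace=namespace,
        MetricData=[{
            "MetricName": "RunnableJobs",  # hypothetical metric name
            "Dimensions": [{"Name": "JobQueue", "Value": job_queue}],
            "Value": depth,
            "Unit": "Count",
        }],
    )
    return depth
```

A call might look like `publish_queue_depth(boto3.client("batch"), boto3.client("cloudwatch"), "remoteiot-queue")`. A persistently high `RunnableJobs` value often means `maxvCpus` is too low or that job resource requests don't fit the available instance types.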

As your IoT deployment expands, the volume of data to be processed will inevitably increase. Scaling your batch jobs is essential to maintaining performance and keeping up with growing demand. Several strategies can help. First, implement auto-scaling for compute resources. AWS Batch managed compute environments, and services such as Amazon EC2 Auto Scaling, automatically adjust the number of compute instances to match demand, so jobs are processed efficiently without paying for idle capacity. Second, use Amazon CloudWatch to monitor metrics and trigger scaling actions. CloudWatch alarms can be set on metrics such as queue depth or CPU utilization, and those alarms can trigger actions such as increasing or decreasing the number of compute instances. Third, optimize job definitions to improve resource utilization. Properly configured job definitions can significantly affect performance: make sure jobs request the appropriate amount of vCPU and memory, and that the container images used for job execution are lean and optimized.
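
To illustrate the job definition advice, the sketch below builds keyword arguments for boto3's `batch.register_job_definition` with explicit vCPU and memory requests, a retry strategy, and a per-attempt timeout. The image URI, the `transform.py` command, and the default sizes are hypothetical; right-sized values should come from the utilization data you collect in CloudWatch.

```python
def job_definition_request(name, image, vcpus=1, memory_mib=2048, attempts=3,
                           timeout_s=3600, job_role_arn=None):
    """Keyword arguments for boto3's batch.register_job_definition (container job on EC2/Spot)."""
    container = {
        "image": image,
        "command": ["python", "transform.py"],  # hypothetical entry point inside the image
        # Batch expects resource values as strings; MEMORY is in MiB.
        "resourceRequirements": [
            {"type": "VCPU", "value": str(vcpus)},
            {"type": "MEMORY", "value": str(memory_mib)},
        ],
    }
    if job_role_arn:
        container["jobRoleArn"] = job_role_arn  # role the job's code assumes (e.g. for S3 access)
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": container,
        "retryStrategy": {"attempts": attempts},          # rerun attempts that fail or are interrupted
        "timeout": {"attemptDurationSeconds": timeout_s},  # stop attempts that hang
    }
```

Retries matter when using Spot Instances: if an instance is reclaimed mid-job, that attempt fails and AWS Batch can run the job again instead of leaving it failed. The timeout keeps a hung job from holding capacity indefinitely.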

Continuous monitoring and optimization of your batch jobs are essential for catching potential issues early, ensuring smooth operation, and maximizing the value of your data processing efforts. Several AWS tools can help. Amazon CloudWatch provides near-real-time monitoring of job metrics such as job status, duration, resource utilization, and error rates; you can build dashboards to visualize these metrics and set up alarms to notify you of anomalies. The AWS Batch console offers a user-friendly interface for managing batch jobs: you can view job status, monitor progress, access logs, and manage job queues from a central point of control. AWS X-Ray helps trace and debug performance issues. When your job code is instrumented with the X-Ray SDK, X-Ray traces requests as they travel through your application, helping you identify bottlenecks, diagnose errors, and understand how the components of your batch pipeline interact.
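
As a small example of turning job metadata into the duration and error-rate figures mentioned above, the function below summarizes job records shaped like those returned by boto3's `batch.describe_jobs`, whose `startedAt` and `stoppedAt` fields are Unix timestamps in milliseconds. The choice of summary fields is illustrative; you might publish them as CloudWatch metrics or show them on a dashboard instead.

```python
def summarize_jobs(jobs):
    """Summarize describe_jobs()['jobs'] entries: finished count, failure rate, mean runtime (s)."""
    finished = [j for j in jobs if j["status"] in ("SUCCEEDED", "FAILED")]
    failed = sum(1 for j in finished if j["status"] == "FAILED")
    # startedAt/stoppedAt are epoch milliseconds; jobs that never started lack startedAt.
    durations = [(j["stoppedAt"] - j["startedAt"]) / 1000
                 for j in finished if "startedAt" in j and "stoppedAt" in j]
    return {
        "finished": len(finished),
        "failure_rate": failed / len(finished) if finished else 0.0,
        "mean_runtime_s": sum(durations) / len(durations) if durations else None,
    }
```

`describe_jobs` accepts up to 100 job IDs per call, so the records could be gathered with `batch.describe_jobs(jobs=job_ids)["jobs"]`. Jobs that fail before they start have no `startedAt`, so they count toward the failure rate but not the runtime average.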

Protecting your IoT data is critical, especially given the sensitive nature of the information many IoT devices collect. Robust security measures safeguard your data and help you comply with relevant regulatory standards. Always encrypt data both in transit and at rest: protect data as it travels between devices and the cloud, and when it is stored in S3 or other storage services. AWS offers several encryption options, including server-side and client-side encryption. Use IAM roles that follow the principle of least privilege. IAM roles define the permissions your batch jobs have within AWS, so grant only the minimum permissions each job needs to function; this limits the potential damage from any security breach. Finally, regularly audit and update your security policies, IAM roles, and other security configurations to ensure they remain effective and aligned with best practices. This can involve penetration testing, security audits, and regular updates to your security practices.
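
For data stored in S3, the two encryption practices above can be expressed as configuration. The sketch below builds a default-encryption request for boto3's `s3.put_bucket_encryption` (SSE-KMS with an S3 Bucket Key) and a bucket policy that denies any request not made over TLS, using the `aws:SecureTransport` condition key. The bucket name and KMS key ARN are hypothetical placeholders.

```python
def default_encryption_request(bucket, kms_key_arn):
    """Keyword arguments for boto3's s3.put_bucket_encryption: encrypt new objects with SSE-KMS."""
    return {
        "Bucket": bucket,
        "ServerSideEncryptionConfiguration": {
            "Rules": [{
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,  # reduces the number of KMS requests for SSE-KMS
            }]
        },
    }


def tls_only_bucket_policy(bucket):
    """Bucket policy that denies every request not made over TLS (encryption in transit)."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }],
    }
```

These could be applied with `s3.put_bucket_encryption(**request)` and `s3.put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))`. Device connections to AWS IoT Core already use TLS; the bucket policy covers the storage side of the pipeline.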

The application of RemoteIoT batch job processing is widespread across a variety of industries, each leveraging the technology to unlock specific advantages. In healthcare, RemoteIoT enables continuous patient monitoring through devices that track vital signs like heart rate, blood pressure, and glucose levels. Batch jobs then process this data to generate insights that help improve patient care, identify potential health risks, and even provide early warnings of deteriorating health conditions. In manufacturing, IoT sensors are used to track machine performance. Batch jobs analyze this data to predict maintenance needs, optimize production schedules, and identify potential defects, leading to improved efficiency and reduced downtime. Agriculture uses RemoteIoT to monitor soil moisture, weather conditions, and crop health. Batch jobs process this data to optimize irrigation, fertilizer use, and overall farming practices, leading to higher yields and reduced resource consumption. Smart cities use RemoteIoT to monitor traffic flow, air quality, and waste management. Batch jobs analyze this data to optimize traffic patterns, improve air quality, and streamline waste collection, leading to a more efficient and sustainable urban environment. In environmental science, RemoteIoT systems are used to monitor air and water quality, track wildlife populations, and measure environmental changes. Batch jobs process this data to provide valuable insights into environmental trends and support conservation efforts.
